Time

Blur Clock / Sun Clock

by Aidan Lincoln & Young min Choi

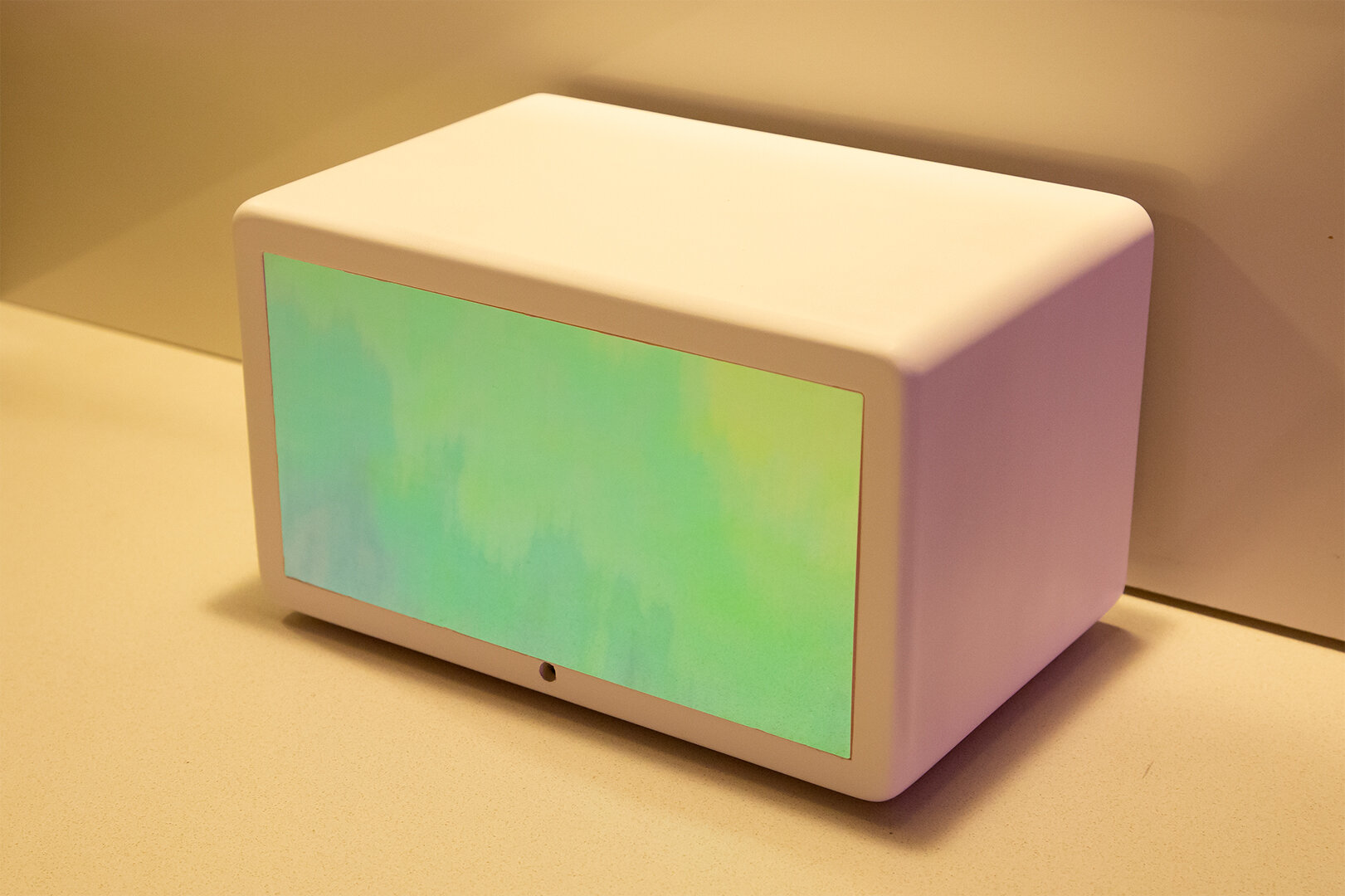

Blur Clock forces the viewer to reflect on the concept of Time, which has become highly warped during COVID-19, and on how time is indeterminate until they measure it by looking at the clock. The clock prevents the viewer from checking the time in the usual quick, nonchalant way: you cannot see the time displayed on the clock unless you give it your full attention and presence for four or five seconds. While your eyes are detected and the LED screen is pushed toward the diffusion panel, making the time legible, you are forced to look at your reflection and consider yourself, your place in time, and what it means to check the time.

Blur Clock is inspired by The Order of Time by Carlo Rovelli, who theorizes that time as we usually imagine it only exists because of a “blurred macroscopic perspective of the world that we encounter as human beings [and that] the distinction between past and future is tied to this blurring and would disappear if we were able to see the microscopic molecular activity of the world.” (Valerie Thompson, Science Magazine). Along with the idea that humans create time itself, Blur Clock draws on quantum mechanics and the idea that nothing exists in a determinate state until an interaction occurs or a measurement is taken. In our clock, the time is indeterminate and blurred until we measure it (by looking at the clock), which makes an exact time visible.

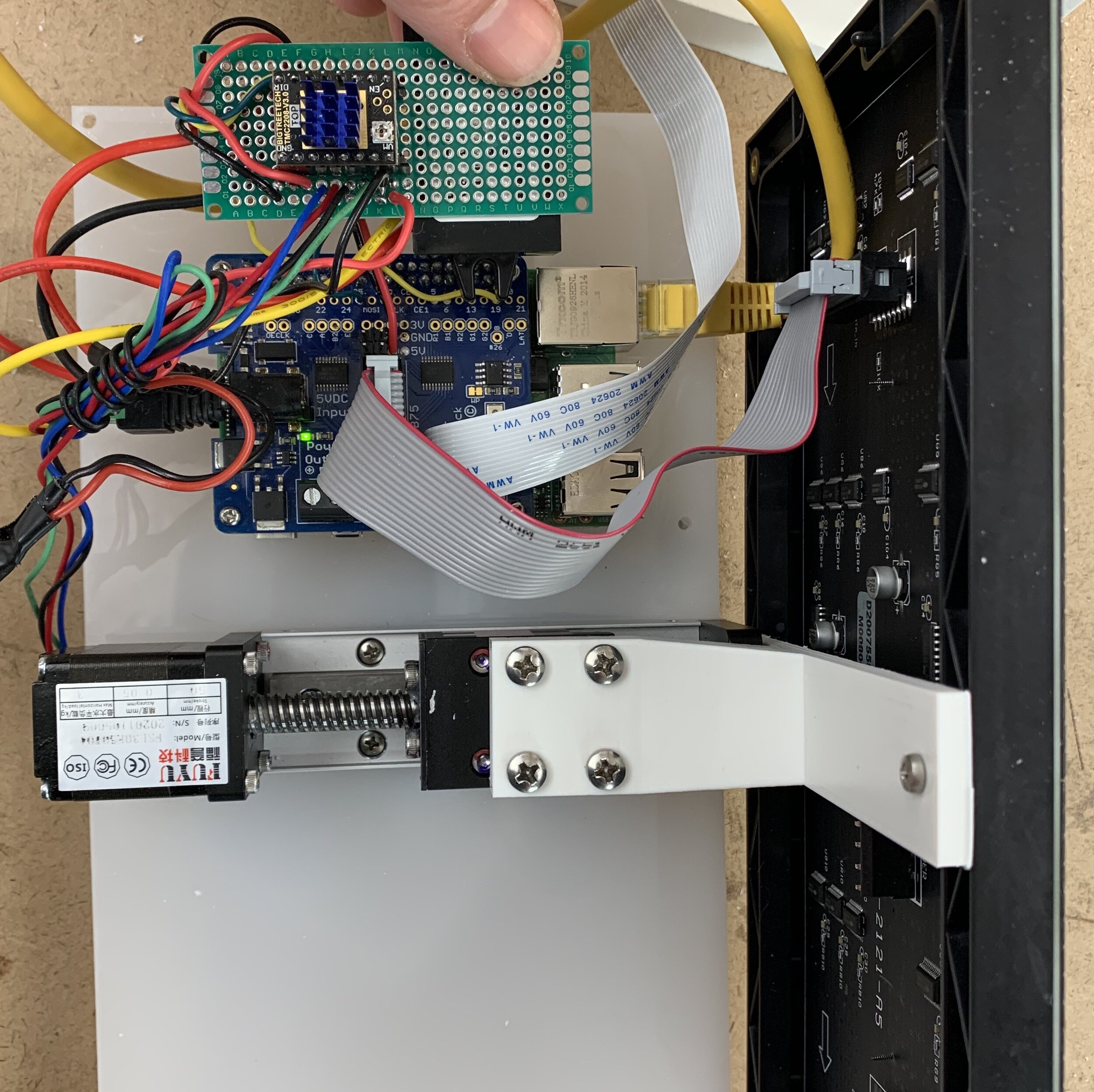

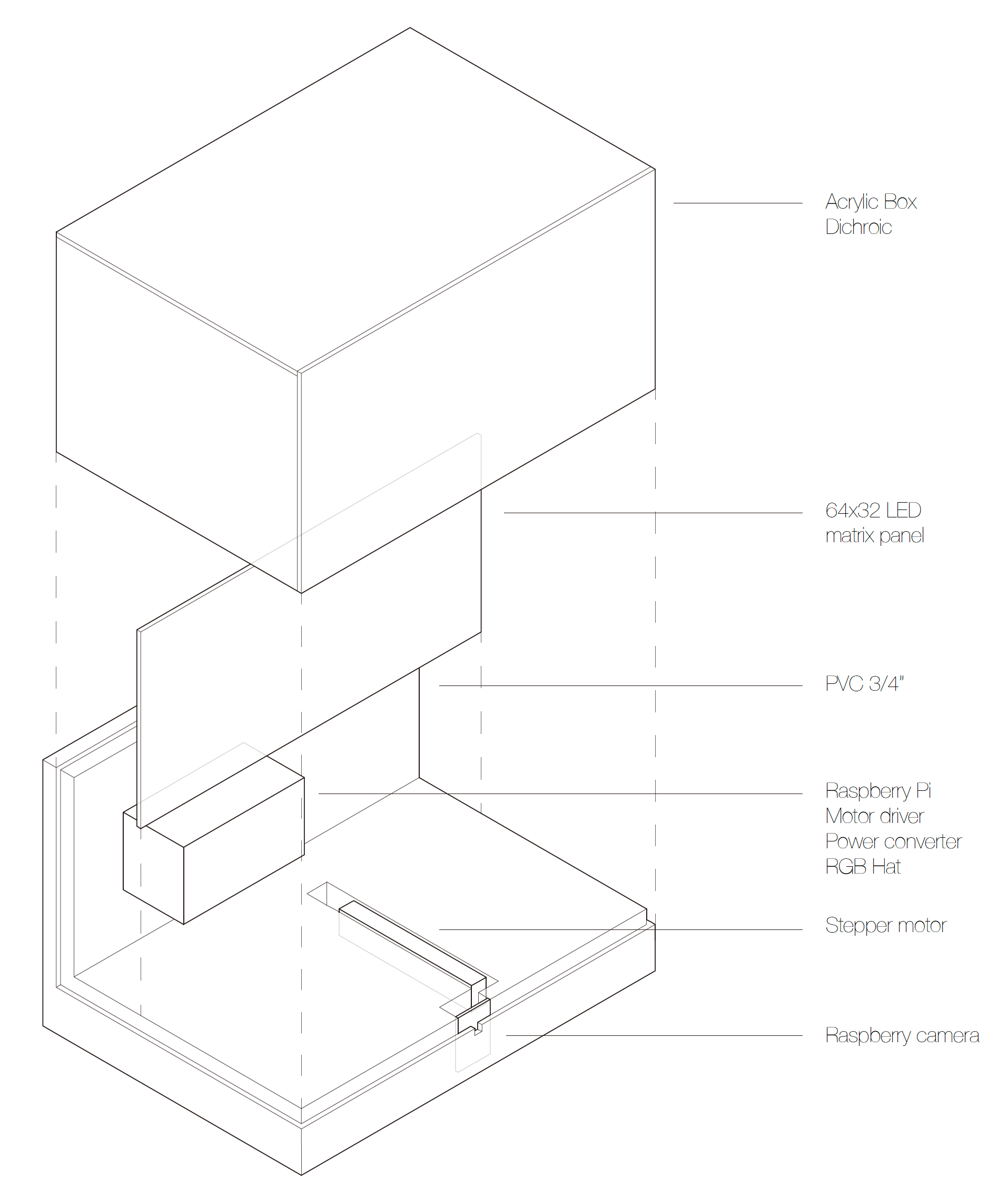

The clock consists of a Raspberry Pi computer connected to a stepper motor, a camera, and an LED matrix panel. Using OpenCV eye tracking based on machine learning models, the clock knows when your eyes are present and open, and it triggers the motor to push the screen closer to the dichroic diffusion panel. When you look away, the LED matrix recedes from view.

The box is made from 10 pieces of CNC’ed PVC sheet, finely sanded and finished. As you walk by the clock, the colors of the face and of the time (or sun) shift.

Version 1

When you are looking at the clock

When you are not looking at the clock

This was recorded before the stepper stopped functioning. You can see the screen move toward me when I am looking at it and away when I move my eyes outside the range of the camera.

Technology:

The clock consists of a 64x32 LED matrix panel connected through an Adafruit RGB Matrix HAT to a Raspberry Pi. In addition to the RGB HAT, a webcam, a real-time clock module, and a stepper-motor-driven linear actuator are attached to the Pi. A Python script runs on startup and, using the GPIO pins, first calibrates the motor (pushing the LED screen to the very front of the display, then moving it slightly back into its default position). Once the motor is calibrated, the script uses bash commands to run a separate C program that displays a clock on the LED matrix. The Pi was preconfigured to use the real-time clock module, so it keeps the time when turned off and does not need to be connected to a network.

The script then uses OpenCV with a TensorFlow-trained face and eye detection model to read in the webcam data, find the face, and detect whether someone's eyes are open. If the code sees someone's eyes, it triggers the motor to push the screen to the front of the clock, making the numbers visible. The LEDs stay pushed to the front as long as you are looking at the clock. When you look away and the model no longer detects your eyes, the motor pulls the screen away from the front of the clock, causing the numbers to blur into a purple, pink, and yellow gradient.

Issues:

Unfortunately, the stepper actuator was cheaply made, and we placed it in a channel exactly as wide as the actuator. Over time, as the sides of the stepper scraped against the clock base, the actuator threads became stripped, and the screen could no longer be pushed back and forth consistently without a little help from our fingers. We took some early video of the clock functioning before it was finished, but we did not realize it would deteriorate over time, so we did not get any “good” footage. In the end we were able to get some video of the blurring sort of working. We are planning to make a number of adjustments and build a second version of the clock.

Fabrication:

We made the sides and top of the clock from acrylic and the face from dichroic film on acrylic. The body is made from CNC’ed PVC. The clock takes in 12 V and converts it down to 5 V for the Raspberry Pi, RGB HAT, and LED screen; the 12 V supply also feeds the stepper motor controller, which drives the motor. The Pi controls the stepper driver through its GPIO pins.

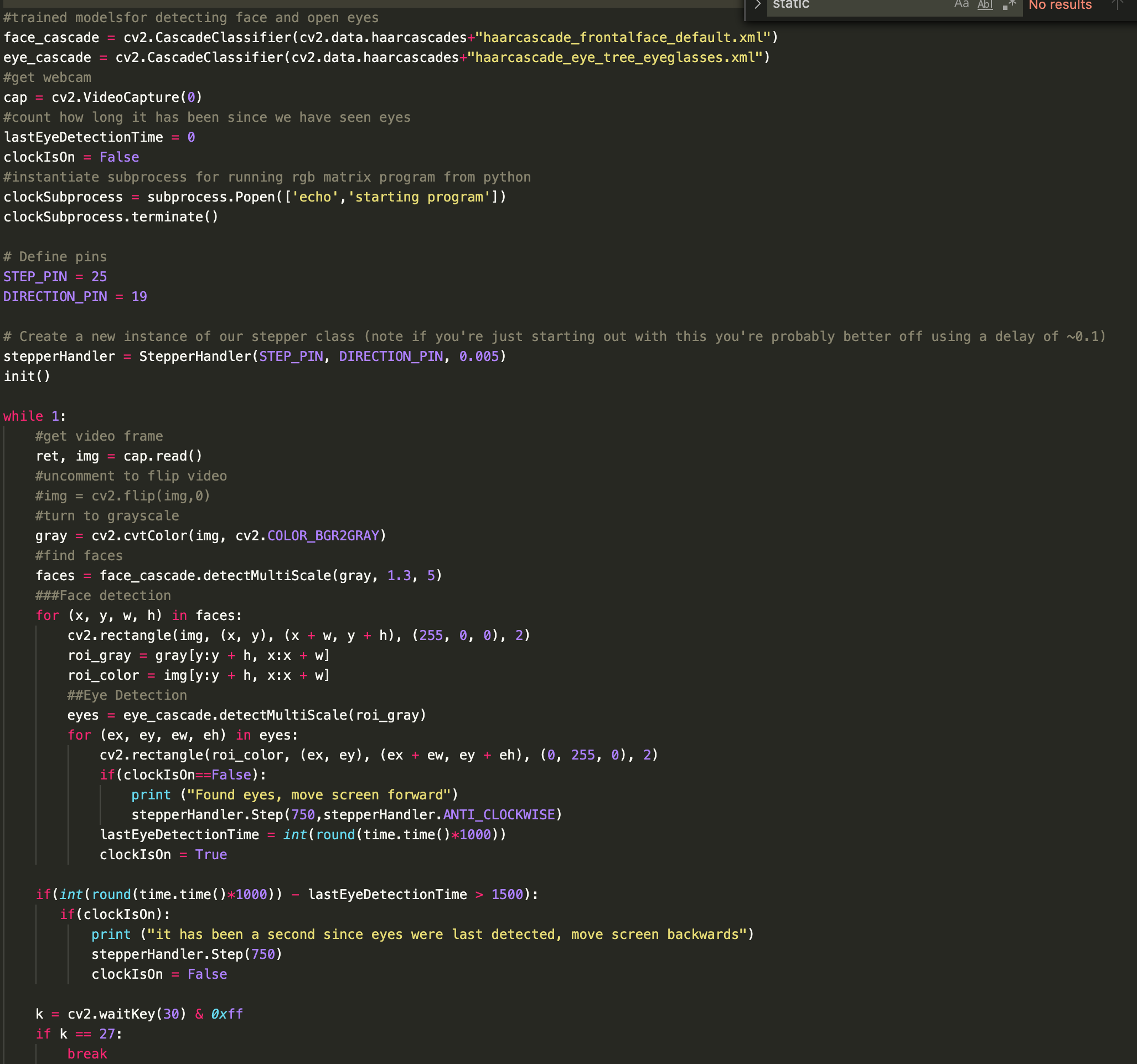

Code:

The import statements bring in OpenCV, NumPy, utilities for calling system code, time, subprocess, etc.

StepperHandler is a class that controls the stepper. Its init method sets the direction, GPIO pins, delay between steps, and steps per revolution, and calls the GPIO setup routines to connect the pins.

The step method tells the motor how far to move and in which direction.
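The class itself isn't reproduced in this post, so here is a minimal sketch of what a StepperHandler like this might look like, assuming a step/direction driver (one pulse on a STEP pin per step, a DIR pin level for direction). The pin numbers, step delay, and 200 steps per revolution are assumptions. The GPIO module is passed in so the class can run off the Pi; on the Pi you would pass RPi.GPIO, and FakeGPIO is a hypothetical stand-in that only records calls.

```python
import time


class FakeGPIO:
    """Hypothetical stand-in for RPi.GPIO that records calls, for running off the Pi."""

    BCM, OUT, HIGH, LOW = "BCM", "OUT", 1, 0

    def __init__(self):
        self.calls = []

    def setmode(self, mode):
        self.calls.append(("setmode", mode))

    def setup(self, pin, mode):
        self.calls.append(("setup", pin, mode))

    def output(self, pin, value):
        self.calls.append(("output", pin, value))


class StepperHandler:
    """Drive a step/direction stepper driver through a GPIO module (sketch)."""

    CLOCKWISE = 1
    COUNTER_CLOCKWISE = 0

    def __init__(self, gpio, step_pin, dir_pin, step_delay=0.002, steps_per_rev=200):
        self.gpio = gpio
        self.step_pin = step_pin
        self.dir_pin = dir_pin
        self.step_delay = step_delay        # seconds STEP stays high, then low
        self.steps_per_rev = steps_per_rev
        # Connect the pins: BCM numbering, STEP and DIR both driven as outputs.
        gpio.setmode(gpio.BCM)
        gpio.setup(step_pin, gpio.OUT)
        gpio.setup(dir_pin, gpio.OUT)

    def step(self, steps, direction):
        """Move `steps` steps; which way HIGH on DIR turns depends on the wiring."""
        level = self.gpio.HIGH if direction == self.CLOCKWISE else self.gpio.LOW
        self.gpio.output(self.dir_pin, level)
        for _ in range(steps):
            # Each HIGH-then-LOW pulse on STEP advances the motor by one step.
            self.gpio.output(self.step_pin, self.gpio.HIGH)
            time.sleep(self.step_delay)
            self.gpio.output(self.step_pin, self.gpio.LOW)
            time.sleep(self.step_delay)

    def rotate(self, revolutions, direction):
        """Convenience wrapper: turn a number of full revolutions."""
        self.step(round(revolutions * self.steps_per_rev), direction)
```

On the Pi this would be used as `motor = StepperHandler(GPIO, step_pin=20, dir_pin=21)` after `import RPi.GPIO as GPIO`, then `motor.step(200, StepperHandler.CLOCKWISE)`. Injecting the GPIO module keeps the timing logic testable without hardware.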

The init function defines the stepper motor, calibrates it, then launches a subprocess that runs the C program that sends the time to the LED screen.
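A minimal sketch of that startup sequence, assuming the carriage has no limit switch: calibration drives the screen forward for at least the full travel so it ends up against the front, then backs off a few steps. The travel and back-off step counts and the clock program path are hypothetical placeholders, and `step` is any callable taking `(steps, direction)`, such as a stepper object's step method.

```python
import subprocess


def calibrate(step, travel_steps, backoff_steps, forward=1, backward=0):
    """Home the screen carriage, assuming no limit switch.

    If travel_steps covers the full length of travel, driving forward leaves the
    carriage against the front of the clock wherever it started; backing off then
    puts it in the default (blurred) resting position.
    """
    step(travel_steps, forward)
    step(backoff_steps, backward)


def start_clock_display(command):
    """Launch the LED clock program in its own process and return the handle.

    Popen returns immediately, so the Python script can continue to the camera
    loop while the C program keeps drawing the time.
    """
    return subprocess.Popen(command)


# On the Pi (hypothetical values and path):
#   calibrate(motor.step, travel_steps=1600, backoff_steps=150)
#   clock = start_clock_display(["sudo", "/home/pi/led_clock"])
```

Running the display as a separate process keeps the clock rendering even while the Python side is busy with face and eye detection.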

Next we define the locations of the TensorFlow models (downloaded onto the Pi in advance), tell OpenCV to open the webcam, and define some variables to track state.

We create an instance of the StepperHandler class with the correct pins and call the init function.

Then the program runs continuously until it detects a keyboard interrupt.

We capture a frame from the webcam, apply some color correction to make the image better suited to the model, then use the model to detect a face.

If we see a face, we then use the eye model to detect if there are open eyes in the frame.
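The detection code isn't included in the post, so this is a sketch of the two-stage idea (find a face, then search for eyes only inside that face) with the detectors passed in as functions. Each detector is assumed to return `(x, y, w, h)` boxes, the format of OpenCV's `CascadeClassifier.detectMultiScale`; the commented wiring uses OpenCV's bundled Haar cascade files as a stand-in for the TensorFlow face model described above, and `min_eyes` is a hypothetical threshold.

```python
def eyes_open_in_frame(gray, detect_faces, detect_eyes, min_eyes=1):
    """Return True if at least `min_eyes` eyes are found inside any detected face.

    Both detectors take an image and return (x, y, w, h) boxes, the format of
    OpenCV's CascadeClassifier.detectMultiScale.
    """
    for (x, y, w, h) in detect_faces(gray):
        face_roi = gray[y:y + h, x:x + w]   # only search for eyes inside the face
        if len(detect_eyes(face_roi)) >= min_eyes:
            return True
    return False


# Example wiring on the Pi (Haar cascades used here as a stand-in):
#   import cv2
#   faces = cv2.CascadeClassifier(cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
#   eyes = cv2.CascadeClassifier(cv2.data.haarcascades + "haarcascade_eye_tree_eyeglasses.xml")
#   gray = cv2.equalizeHist(cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY))
#   looking = eyes_open_in_frame(gray, faces.detectMultiScale, eyes.detectMultiScale)
```

Restricting the eye search to the face region cuts down on false positives elsewhere in the frame. Haar eye cascades tend to miss closed eyes, which is what makes "eyes found" a rough proxy for "eyes open".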

If there are open eyes, we tell the stepper to move the screen forward.

Once 1.5 seconds pass without any eye detection, we trigger the screen to move backward if it was previously in the forward position.
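One way to implement the 1.5-second grace period without pausing the camera loop is to remember when eyes were last seen and compare timestamps on every frame. This is a sketch, not the original code: `move_forward` and `move_back` are hypothetical callables (for example, wrappers around a stepper's step method), and the clock function is injectable so the logic can be tested without waiting.

```python
import time


class ScreenController:
    """Push the screen forward on eye contact; pull it back after a quiet period."""

    def __init__(self, move_forward, move_back, hold_seconds=1.5, clock=time.monotonic):
        self.move_forward = move_forward
        self.move_back = move_back
        self.hold_seconds = hold_seconds
        self.clock = clock
        self.is_forward = False
        self.last_seen = None

    def update(self, eyes_detected):
        """Call once per camera frame with the latest detection result."""
        now = self.clock()
        if eyes_detected:
            self.last_seen = now
            if not self.is_forward:          # only move once per look
                self.move_forward()
                self.is_forward = True
        elif self.is_forward and now - self.last_seen >= self.hold_seconds:
            self.move_back()                 # no eyes for hold_seconds: blur again
            self.is_forward = False
```

Because the motor only moves on state changes, brief detection dropouts shorter than the hold time don't make the screen jitter back and forth.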

Sketch 4: Blink Clock

In preparation for the midterm Blur Clock that Young-Min and I are building, I built a clock using the same LED screen and camera that we are going to use. Instead of blurring the time when someone is not looking at it, this clock only works when your eyes are open and you are staring at it.

The hardware consists of a Raspberry Pi with an Adafruit RGB Matrix HAT, a 64x32 LED matrix panel, and a Raspberry Pi camera. A Python script reads the camera and uses OpenCV with pretrained machine learning models (Haar cascades) to track faces and eyes.

When the Python script sees open eyes looking at the camera, it launches a C program from the RPI-RGB-LED-MATRIX library that displays the current time. The C program is “killed” when you look away from the clock, cover your eyes, or close your eyes completely, making the screen go blank.
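A sketch of that start/kill behavior with Python's subprocess module, assuming the clock binary can simply be started and terminated; the command is a placeholder for the actual C program and its arguments. The process is only started when it isn't already running and only stopped when it is, so calling `update` on every camera frame is safe.

```python
import subprocess


class ClockProcess:
    """Run the LED clock program only while open eyes are detected (sketch)."""

    def __init__(self, command):
        self.command = command      # placeholder: the real C clock binary and arguments
        self.proc = None

    def running(self):
        return self.proc is not None and self.proc.poll() is None

    def update(self, eyes_open):
        if eyes_open and not self.running():
            self.proc = subprocess.Popen(self.command)
        elif not eyes_open and self.running():
            self.proc.terminate()            # ask it to exit (SIGTERM on POSIX)
            try:
                self.proc.wait(timeout=2)
            except subprocess.TimeoutExpired:
                self.proc.kill()             # force it if it ignores SIGTERM
                self.proc.wait()
            self.proc = None
```

Waiting on the terminated process reaps it, so repeatedly looking at and away from the clock does not leave zombie processes behind.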

The code is too messy for me to share right now; everything included in this sketch will be used for the midterm project, and I will share it then.

Sketch 3: Dichroic Sundial

For my third sketch, I created a sundial with a gnomon covered in dichroic film. I found a sundial generator that creates the correct dial lines for your latitude. I cut the dial from 1/8 inch translucent acrylic and the gnomon from 1/4 inch clear acrylic with dichroic film applied to it. The thicker gnomon makes the sundial look more solid and gives the shadow line roughly the same weight as the dial lines. Below is a time lapse of the sundial from sunrise to sunset on my rooftop, looking at Manhattan.
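The standard hour-line formula for a horizontal sundial is tan θ = sin φ · tan h, where φ is the latitude, h is the hour angle (15° per hour from solar noon), and θ is the angle of the hour line from the noon line; the gnomon's shadow-casting edge is tilted at φ so it points at the celestial pole. The post doesn't name the generator, so this is an independent sketch of that formula, and the 40.7° N latitude below is an assumption based on the rooftop view of Manhattan. The angles are in local solar time, so clock time differs by the equation of time and the offset from the time-zone meridian.

```python
import math


def hour_line_angles(latitude_deg, hours=range(6, 19)):
    """Hour-line angles (degrees from the noon line) for a horizontal sundial.

    Implements tan(theta) = sin(latitude) * tan(h), where the hour angle h is
    15 degrees per hour from solar noon; afternoon hours come out positive.
    """
    phi = math.radians(latitude_deg)
    angles = {}
    for hour in hours:
        h = math.radians(15 * (hour - 12))
        # atan2 form avoids tan(90 deg) at 6 am / 6 pm, where the lines sit at -/+90 deg.
        angles[hour] = math.degrees(math.atan2(math.sin(phi) * math.sin(h), math.cos(h)))
    return angles


latitude = 40.7            # assumption: roughly New York City
gnomon_angle = latitude    # the gnomon's edge rises at the latitude angle
for hour, angle in hour_line_angles(latitude).items():
    print(f"{hour:2d}:00  {angle:+7.2f} deg")
```

The lines are symmetric about noon and bunch up around midday, which is why a dial generated for one latitude reads wrong at another.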

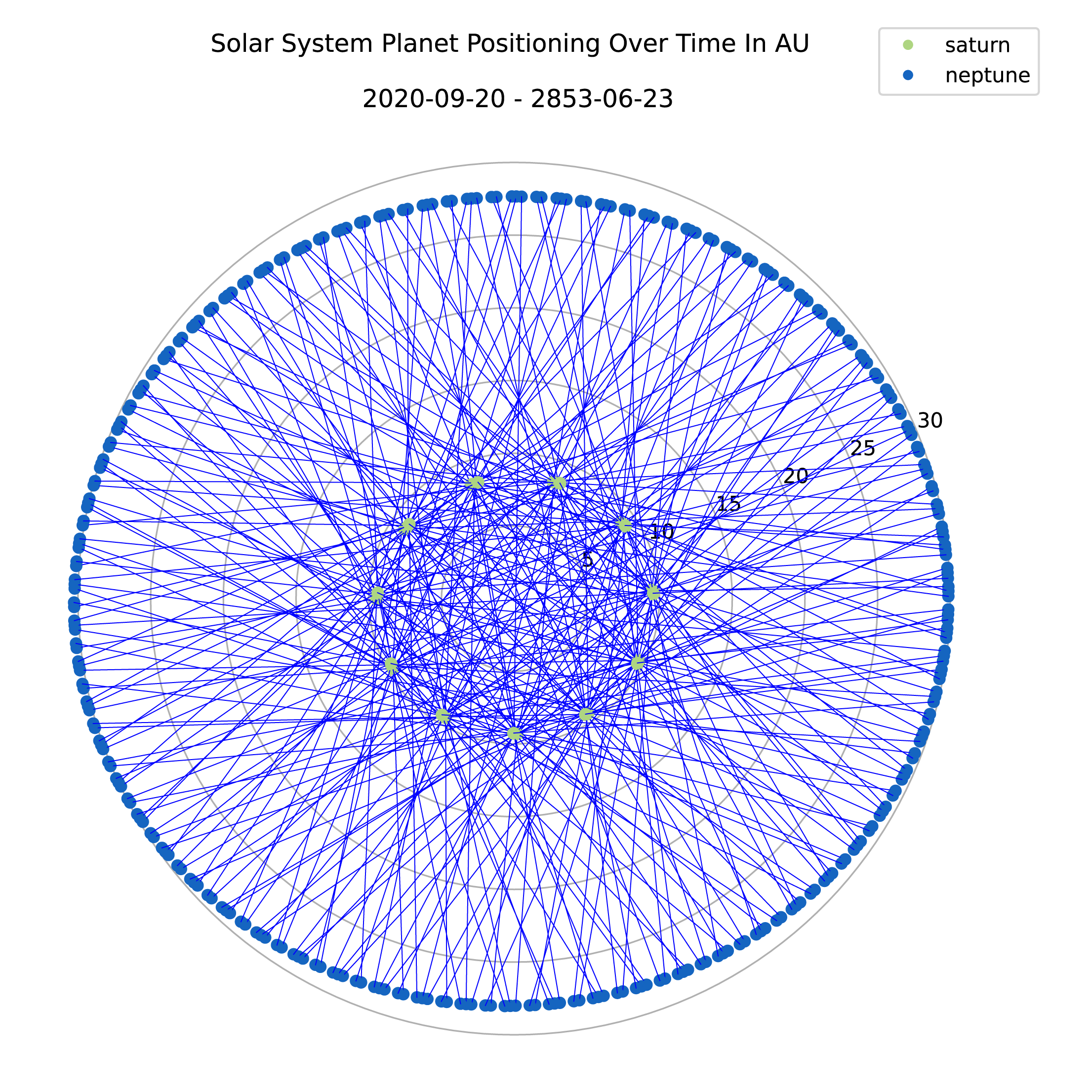

Sketch 2: AstroPy & The Real Heavenly Forms

For this sketch I recreated my abstractions of the planetary movements in Python using the Astropy library, which gives the precise location of a planet at a specific date and time. I ran quite a few simulations and found that the planets' orbits are more elliptical than I had imagined. The actual graphs of relative planet movement over time vary slightly from the pieces I had already created. Perhaps this means I should make another edition of the series with the “real” graphs in the future. The left column is a graph of the relative distance between planets using exact coordinates. The right column shows the previous physical pieces, which I created using approximations in Processing.

More Simulations:

Below are some more animations that I created using AstroPy to simulate the near solar system as well as graphing the distance between multiple planets at the same time.

Sketch 1: Heavenly Forms Exploration

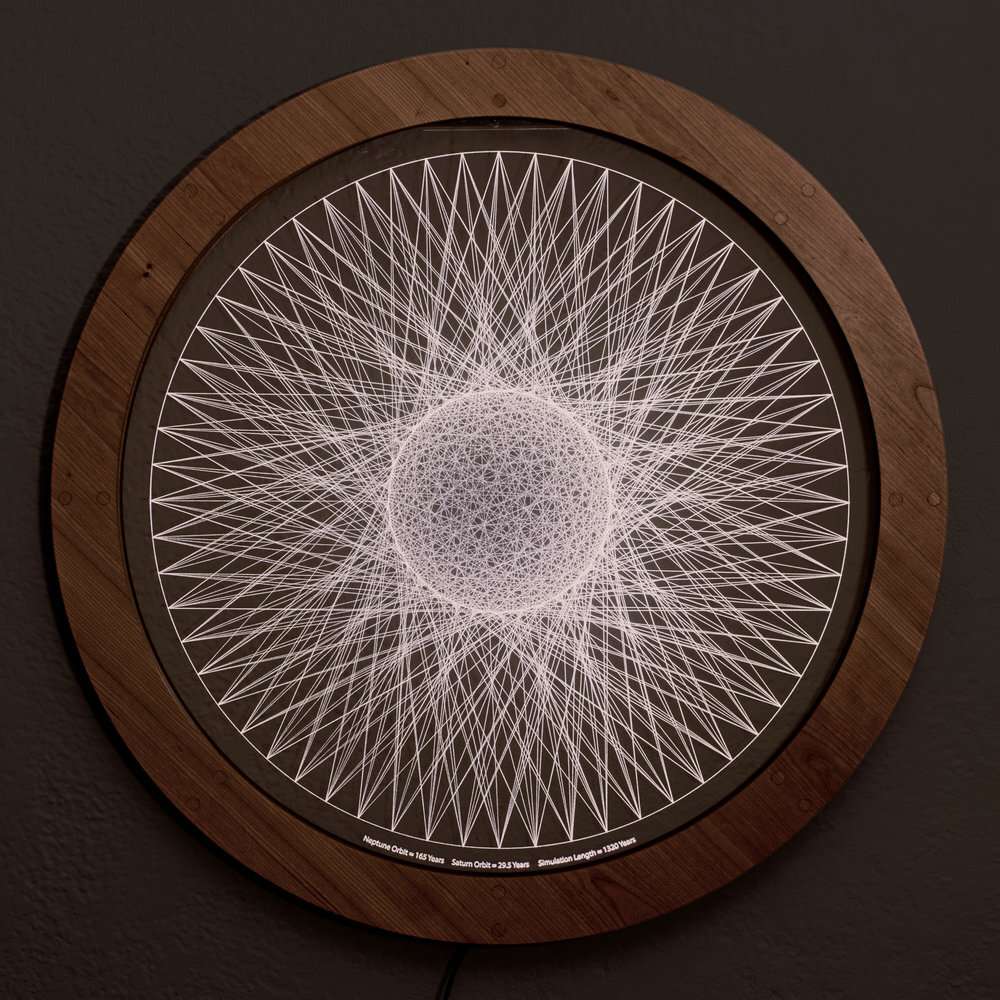

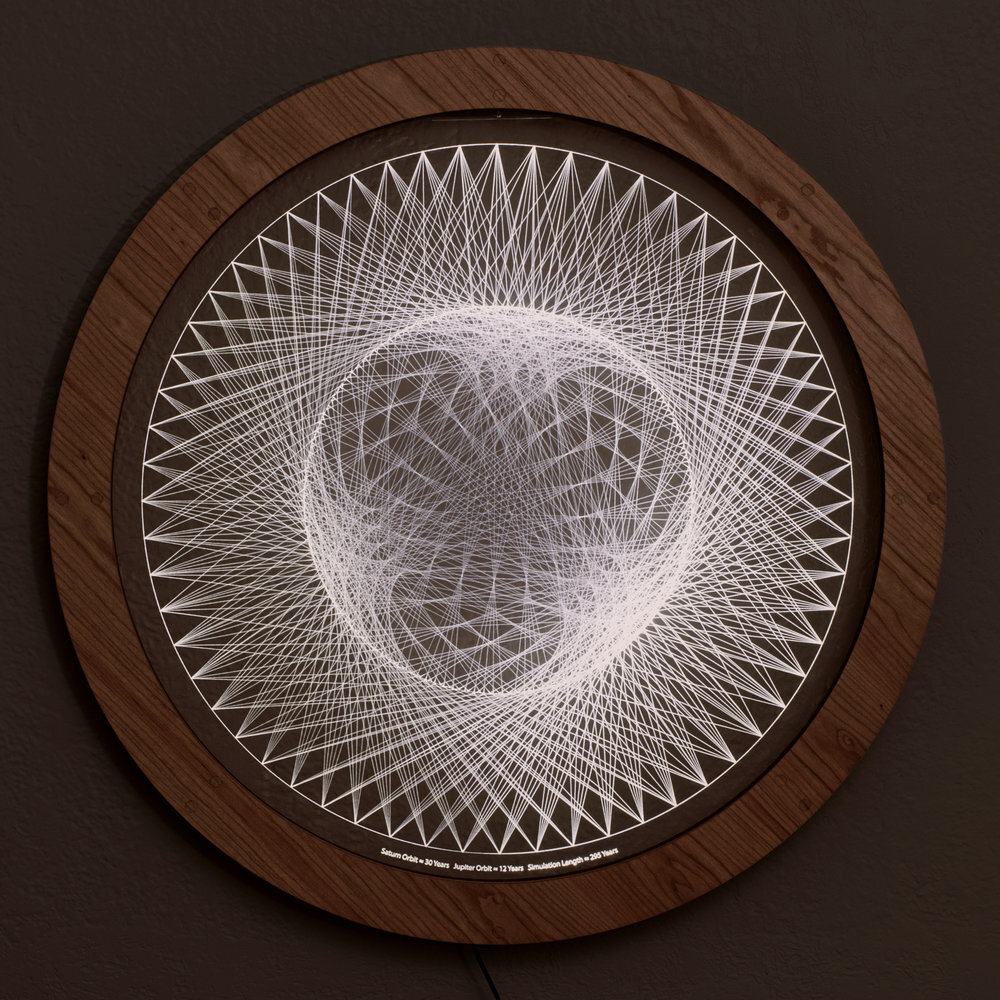

For this sketch, I continued developing a program I wrote a couple of years ago that plots the relative movement between planet pairs. I originally used this program to produce SVG files for a laser cutter, which engraved the relative movement of planet pairs into acrylic that is edge-lit with LED strips (pictured below).

This week, I modified the program to graph the relative distance between Earth and Mars on top of the relative distance between Earth and Venus. I let the simulation run for about 45 Earth years. Note that, in real life, the planets' orbits are not perfect concentric circles.
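The original program (written in Processing, per Sketch 2) isn't shown, so here is a Python sketch of one reading of "relative movement": put each planet on a circular, coplanar orbit with its mean radius and sidereal period, subtract Earth's position from the other planet's at each time step, and overlay the resulting paths as SVG polylines that a laser cutter can engrave. The orbital figures are standard rounded values; the 2-day step, colors, drawing scale, and the assumption that all planets start aligned on day 0 are illustrative choices.

```python
import math

# Mean orbital radius (AU) and sidereal period (days), rounded standard values.
# Orbits are treated as circular and coplanar, all starting at angle 0 on day 0.
ORBITS = {"venus": (0.723, 224.7), "earth": (1.000, 365.25), "mars": (1.524, 687.0)}


def position(planet, day):
    """Heliocentric (x, y) in AU on the idealized circular orbit."""
    radius, period = ORBITS[planet]
    angle = 2 * math.pi * day / period
    return radius * math.cos(angle), radius * math.sin(angle)


def relative_path(origin, other, years=45, step_days=2):
    """Path of `other` as seen from `origin` (other - origin), one point per step."""
    points = []
    for day in range(0, int(years * 365.25), step_days):
        ox, oy = position(origin, day)
        px, py = position(other, day)
        points.append((px - ox, py - oy))
    return points


def to_svg(paths, size=800, scale=150):
    """Overlay (points, color) paths as SVG polylines centred on the origin planet."""
    c = size / 2
    body = []
    for points, color in paths:
        coords = " ".join(f"{c + x * scale:.2f},{c - y * scale:.2f}" for x, y in points)
        body.append(f'<polyline points="{coords}" fill="none" stroke="{color}" stroke-width="0.5"/>')
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">'
            + "".join(body) + "</svg>")


svg = to_svg([(relative_path("earth", "mars"), "red"), (relative_path("earth", "venus"), "blue")])
```

Writing `svg` to a `.svg` file gives a vector drawing centred on Earth; swapping the idealized `position` for Astropy positions is what produces the slightly different "real" shapes.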

Simulation used to generate vector file for piece to the right

Neptune - Uranus 8: 2019 - 46x46 inches - Acrylic, Cherry Wood, LED Strip

New simulation graphing the relative orbits of Earth & Venus + Earth & Mars